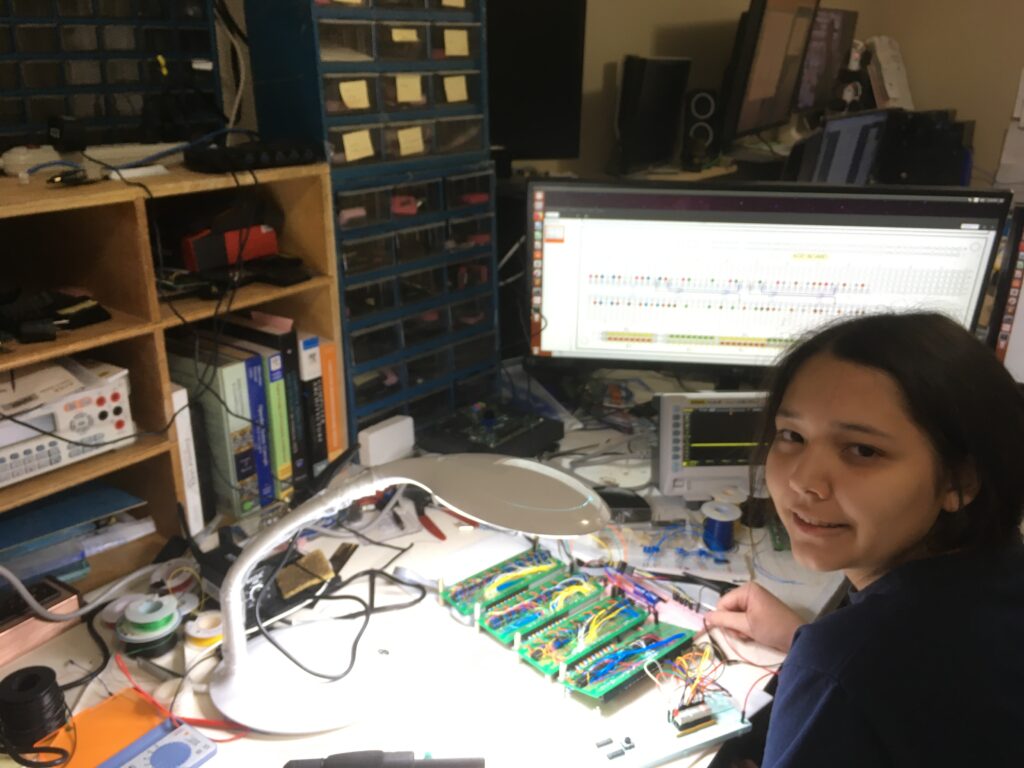

I am a Carnegie Mellon University sophomore who is passionate about computer architecture.

Why is Computer Architecture Important?

It was back in 1943 that neural networks were first proposed. Eighty years later, they have become one of the hottest new technologies, not because of any new discovery, but because computers have finally become fast enough to make them feasible. I look forward to the day when my driver’s license becomes obsolete and self-driving cars take away the stress and danger of driving.


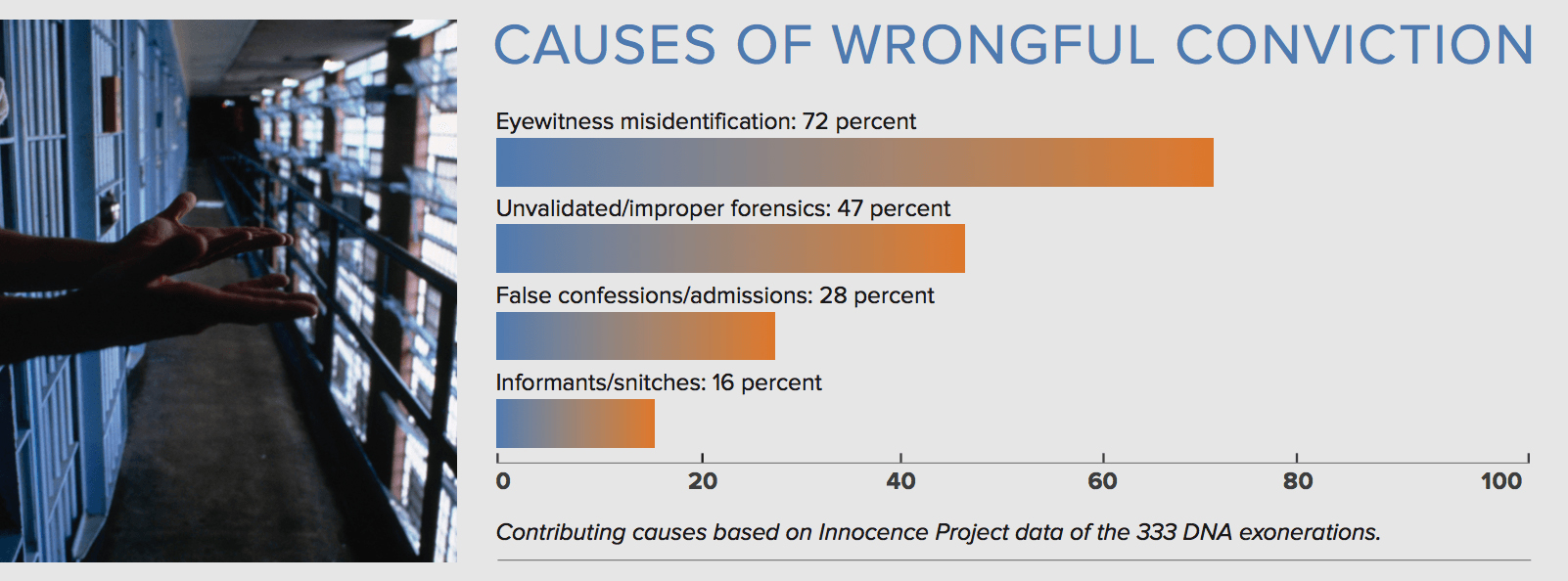

Gene sequencing would have been an insurmountable task if it were not for high-speed computation. Sequencing the first human genome took thirteen years and was completed in 2003, but thanks to computational power, an individual’s DNA can now be sequenced in two days. This technology has not only extended many people’s lives and helped cure ailments, but it has also exonerated many innocent people unjustly incarcerated by ambitious prosecuting attorneys.


The computers that make cell phones possible give us a measure of safety and security. When lost or stranded alongside the road, we are no longer at the mercy of strangers. I have heard that in the 1970s and 1980s, one of women’s greatest fears was being stranded late at night alongside a deserted highway.

Computational abilities have contributed greatly to humankind, making many other technologies possible. Granted, parents complain that their kids have become disconnected. Still, I believe computers have been the source of many more magical improvements to society, providing more value to people’s lives than anything else.

My Computer Architecture Vision

Every new generation takes a fresh perspective on the world, and the field of computer architecture is in the midst of a major paradigm shift. Processor speed has historically come with miniaturization, yet we now measure transistors in widths of atoms. They can’t get much smaller. New solutions are emerging, and perhaps the standard von Neumann machine will no longer be in the limelight. In their Turing Lecture, Hennessy and Patterson called this “A New Golden Age for Computer Architecture.” With more powerful development tools and lower costs for prototyping chips, the future belongs to application-specific processors. It is already happening:

- DSPs – Digital Signal Processors

- FPGAs – Field Programmable Gate Arrays

- GPUs – Graphics Processing Units

- TPUs – Tensor Processing Units

- QPUs – Quantum Processing Units

New architectures are emerging that change how we think of processing. GPUs are an interesting case: once used only to calculate how textures and shadows are displayed, and then popularized for faster gaming, they have now morphed into General-Purpose Graphics Processing Units (GPGPUs), composed of thousands of small processors working together to digest large datasets.

In 2019, I visited the Science Museum in London, and I was impressed by its collection of application-specific computers:

- Electronic Storm Surge Modelling Machine

- Phillips Economics Analog Computer

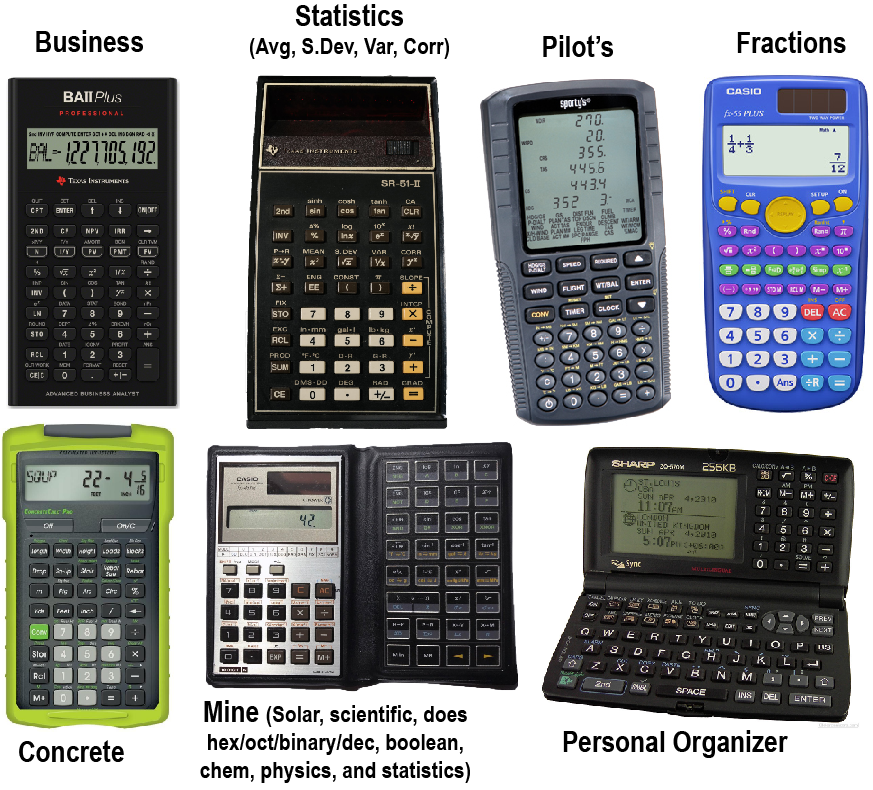

At first, calculators were simple, generic adding machines. Over time, however, they have been architected into more application-specific devices, including one you can buy at Home Depot that calculates the amount and size of rebar needed for a concrete pour.

Likewise, the computers of the future will be application-specific architectures.

Squaring²

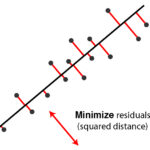

Almost every time someone works with data, a square is involved. Why? It all comes down to two foundational mathematical tools: 1) the method for determining how far one data point is from another, and 2) the magnification of a measurement.

Everyone takes a math course in middle school that asks you to calculate the distance between two points. How do you do that? You apply the Pythagorean theorem, $A^2 + B^2 = C^2$: for points $(x_1, y_1)$ and $(x_2, y_2)$, the distance is $C = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}$. In data analysis, you evaluate how far one measurement is from the average or from one or more other data points.
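To make the role of the square concrete, here is a minimal Python sketch of the distance calculation described above, generalized from two coordinates to any number of them. The function name `euclidean_distance` is hypothetical and chosen only for illustration; Python 3.8+ also ships `math.dist`, which computes the same quantity.

```python
import math

def euclidean_distance(p, q):
    """Distance between two points with the same number of coordinates.

    Generalizes the Pythagorean theorem A^2 + B^2 = C^2: square each
    coordinate difference, add the squares, and take the square root.
    """
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    squared_sum = sum((a - b) ** 2 for a, b in zip(p, q))
    return math.sqrt(squared_sum)

# A 3-4-5 right triangle: legs of 3 and 4 give a hypotenuse of 5.
print(euclidean_distance((0, 0), (3, 4)))  # 5.0

# Distance of one measurement from the average of a sample (1-D case).
data = [2.0, 4.0, 6.0]
mean = sum(data) / len(data)
print(euclidean_distance((data[0],), (mean,)))  # 2.0
```

Every coordinate contributes one square, so a distance between points with many features costs one squaring operation per feature.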

The other fundamental tool underlying data analysis is the sum of squares, which measures how well an analysis fits the data, or how spread out the data is. It uses the formula $\sum_i (Y_i - \hat{Y}_i)^2$, where each actual value $Y_i$ is compared against its estimated value $\hat{Y}_i$, or $\sum_i (x_i - \bar{x})^2$, where each value $x_i$ is compared against the average $\bar{x}$. Both use squares to accentuate, or magnify, differences.
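The two sums of squares above can be sketched in a few lines of Python. This is an illustrative example, not a statistics library; the function names `sum_squared_errors` and `sum_squared_deviations` are hypothetical.

```python
def sum_squared_errors(actual, estimated):
    """Sum over i of (Y_i - Yhat_i)^2: how far estimates fall from actual values."""
    if len(actual) != len(estimated):
        raise ValueError("sequences must have the same length")
    return sum((y - y_hat) ** 2 for y, y_hat in zip(actual, estimated))

def sum_squared_deviations(values):
    """Sum over i of (x_i - xbar)^2: how spread out values are around their mean."""
    mean = sum(values) / len(values)
    return sum((x - mean) ** 2 for x in values)

actual = [3.0, 5.0, 7.0]
estimated = [2.5, 5.0, 8.0]
print(sum_squared_errors(actual, estimated))  # 1.25
print(sum_squared_deviations(actual))         # 8.0

# Squaring magnifies large misses: one error of 2 costs more than two errors of 1.
print(sum_squared_errors([0, 0], [2, 0]), sum_squared_errors([0, 0], [1, 1]))  # 4 2
```

The last line shows the "magnification" effect: the same total miss of 2 is penalized twice as heavily when it is concentrated in a single data point.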

How are these square-intensive calculations computed? With general-purpose multipliers, not with faster dedicated squarers.

In the 1980s, Reduced Instruction Set Computers (RISC) became popular. David Patterson showed that general-purpose processors would be faster if you got rid of everything that wasn’t absolutely necessary. Packing the logic into a smaller footprint meant that signals didn’t have as far to travel, making computation faster. Since a square can be calculated with a multiply whose two operands are identical, a dedicated squarer was considered unnecessary, even though it could be faster. But did we throw the baby out with the bathwater? With emerging technology, perhaps we need to take another look at the squarer.
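Why can a squarer be cheaper than a multiplier? One common explanation is the symmetry of the partial products. When both operands are the same number with bits $a_i$, the term $a_i a_i$ is just $a_i$, and the pair $a_i a_j + a_j a_i$ collapses into a single bit $a_i a_j$ shifted one position left. The Python sketch below models this at the level of weighted bits only; it is not a gate-level design, the function names are hypothetical, and the partial-product count is used as a rough proxy for hardware cost, not a timing model.

```python
def bits(x, n):
    """Return the n least significant bits of x, least significant first."""
    return [(x >> i) & 1 for i in range(n)]

def multiply_partial_products(x, y, n):
    """(bit, weight) pairs a plain n-by-n array multiplier sums for x * y."""
    a, b = bits(x, n), bits(y, n)
    return [(a[i] & b[j], i + j) for i in range(n) for j in range(n)]

def square_partial_products(x, n):
    """(bit, weight) pairs a folded squarer sums for x * x.

    Two simplifications apply when both operands are the same number:
      * a_i AND a_i == a_i, so diagonal terms need no AND gate;
      * a_i*a_j + a_j*a_i == 2*a_i*a_j, so each symmetric pair collapses
        into a single bit shifted one position left (weight i + j + 1).
    """
    a = bits(x, n)
    diagonal = [(a[i], 2 * i) for i in range(n)]
    folded = [(a[i] & a[j], i + j + 1) for i in range(n) for j in range(i + 1, n)]
    return diagonal + folded

def accumulate(partial_products):
    """Add up the weighted bits, as the adder tree would in hardware."""
    return sum(bit << weight for bit, weight in partial_products)

# Check both models against Python's own multiplication for every 8-bit value.
n = 8
for x in range(2 ** n):
    assert accumulate(multiply_partial_products(x, x, n)) == x * x
    assert accumulate(square_partial_products(x, n)) == x * x

print(len(multiply_partial_products(0, 0, n)))  # 64 partial-product bits
print(len(square_partial_products(0, n)))       # 36 partial-product bits
```

For an $n$-bit operand, the multiplier sums $n^2$ partial-product bits while the folded squarer sums $n(n+1)/2$, roughly half as many, which suggests a smaller adder tree for the same result.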