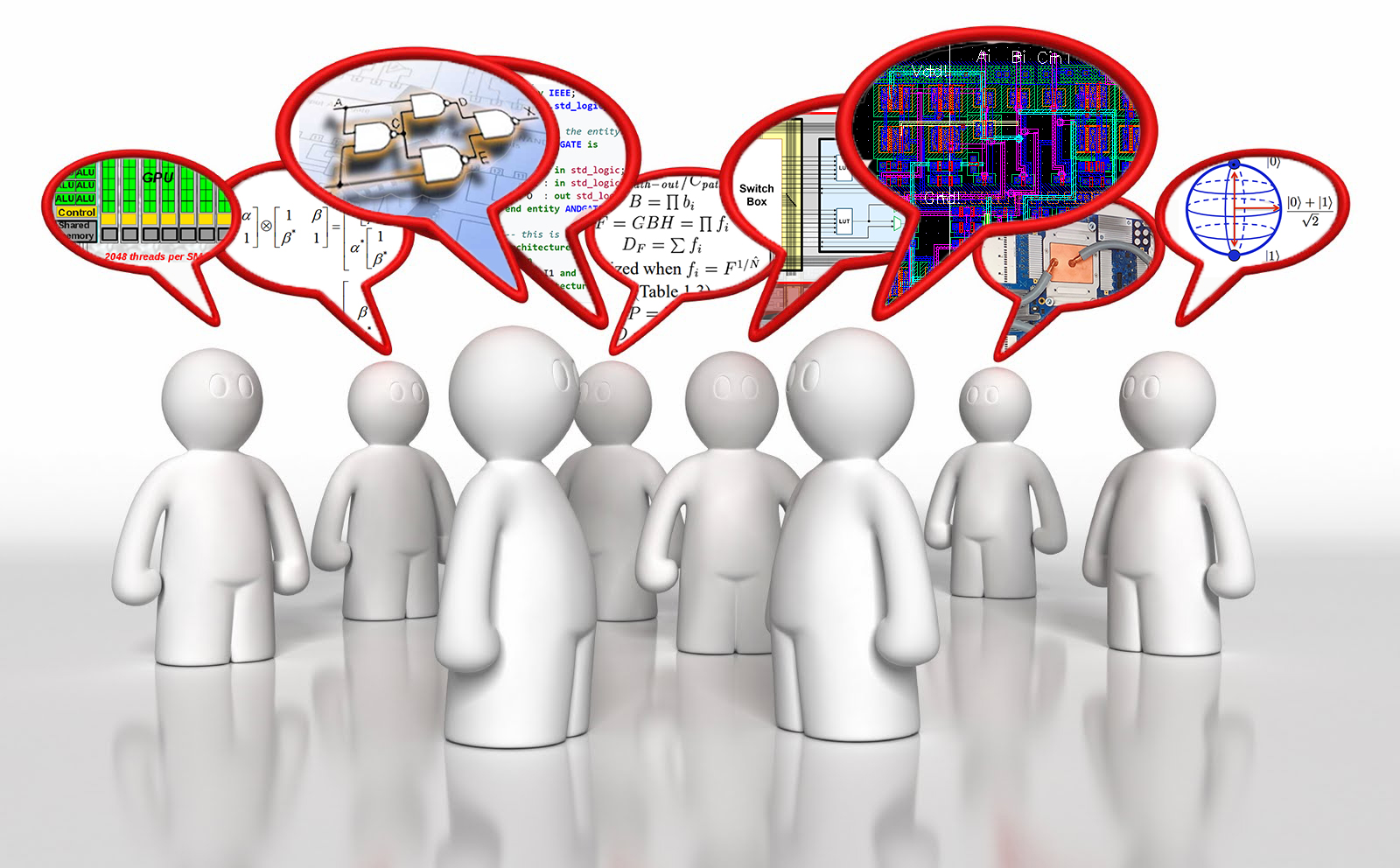

Some people climb mountains, some explore caves, but I love exploring the verge of knowledge. I love stepping beyond the edge, going places where others gave up and where no footprints are seen.

When I was twelve, after scoring a top grade on the AP Computer Science exam, I realized that, for me, programming was boring. Algorithms seemed passé and rote.

To let you know a little bit about me, I have always been a nuts-and-bolts kind of girl. I like to understand how things work under the hood. So after the AP test, it was no surprise that I began reading Digital Design by Harris and Harris. However, I found I lacked the electronics knowledge needed to fully understand its concepts. I knew there were gaps where I needed outside instruction.

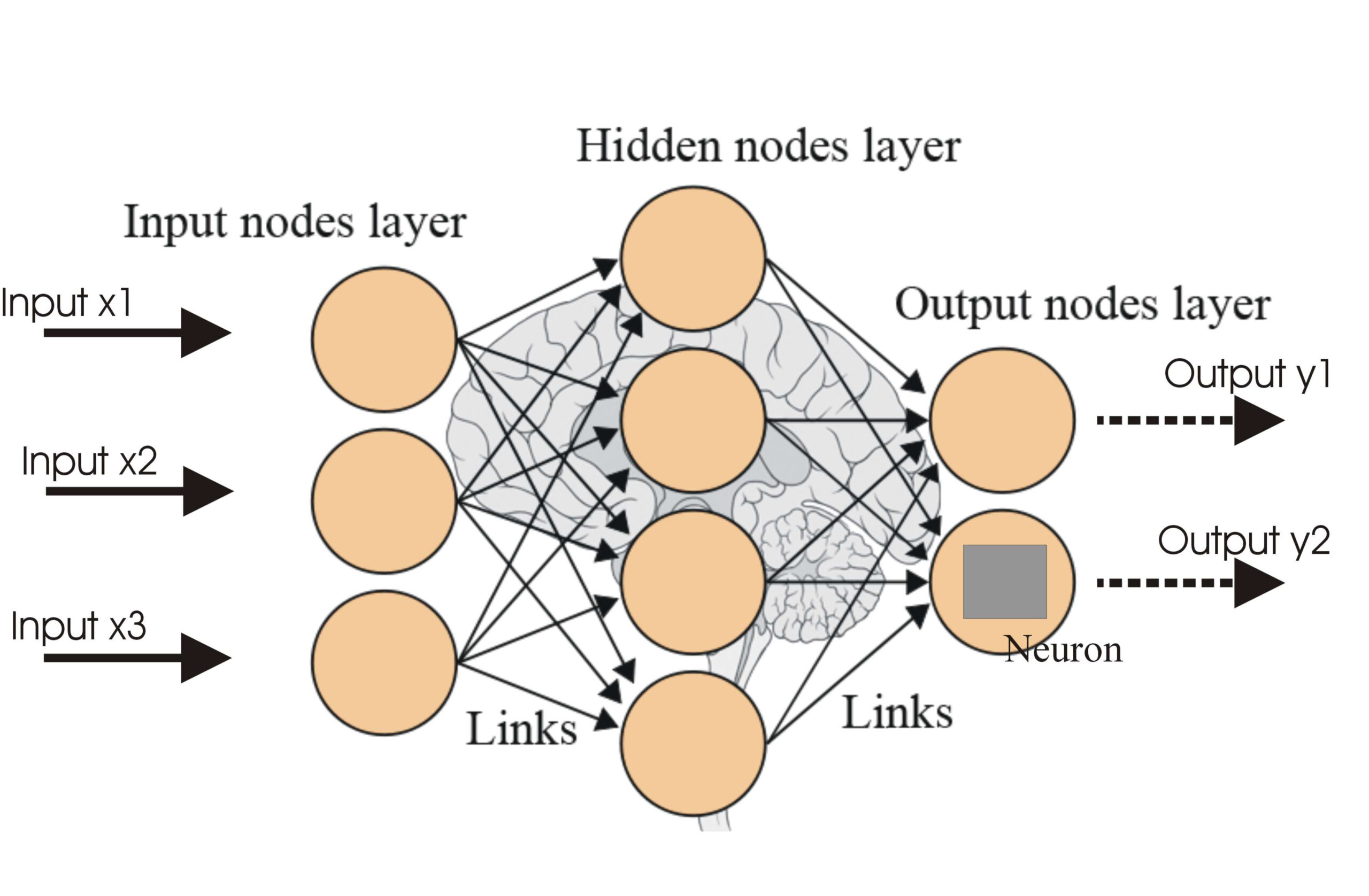

I heard a lot of talk about how Machine Learning is revolutionizing the world, so I decided to explore it by taking a Coursera/Stanford class and reading numerous research papers. I was inspired by Prof. Geoffrey Hinton, who brings the perspective of many decades of research, often saying, “We tried that a long time ago and found it did not work,” and pointing out that “Convolutional Neural Nets are broken because they don’t take into account relative position of objects.” Sadly, too many of his gems fall on deaf ears. Artificial Intelligence is interesting, but it doesn’t inspire me.

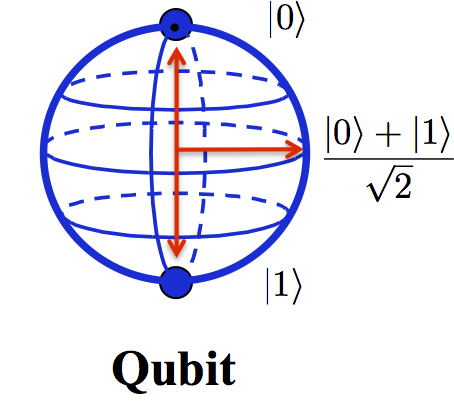

Recently, I also explored Quantum Computing by taking a class from IBM. At the end of the class, there was a panel discussion, and one of the experts (Dr. Steve Girvin from Yale) gave his parting advice: “A lot of people are asking about Quantum Machine Learning, but the algorithms require an exponentially large number of qubits. That is not going to happen in the near future.” Then the moderator spoke up: “That is sad. My area of research is Quantum Machine Learning.” Later, the moderator added:

“I am not going to let anyone discourage me. I started in Finance, then went to Machine Learning, and now I apply my experience to Quantum Computing.” With so many people chasing whatever research area is hot at the moment, they become, in effect, transients who never settle into a research home of their own.

I am determined not to be sidetracked by popular trends, because Computer Architecture and Computational Arithmetic simply make me happy. Everyone else can chase the latest flashy bauble. I have found my home and my passion.

My Big Breakthrough

It was Christmas, 2018. I was downstairs in the basement in my mad-scientist laboratory. I could hear the clanging of pots and pans and the footsteps of my dad and little brother above in the kitchen. They were making a mess that I knew I would have to clean up. I had just finished my first real Digital Design class at the local college, South Dakota School of Mines & Technology, and my final exam had included Boolean algebra for optimizing logic. In front of me was my science fair project, which displayed a Mandelbrot Set on my Raspberry Pi. I used framebuffers to draw the screen quickly and wrote a mouse controller to drive it. Rendering a Mandelbrot Set requires an enormous number of squaring operations, so I began thinking about how I might speed up squaring.
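
To show where all those squares come from, here is a minimal escape-time sketch of the Mandelbrot iteration z ← z² + c. It is a generic illustration in Python floating point, not the science-fair code (which isn't shown here); the function name `escape_time` and the `max_iter` cutoff are hypothetical choices for this example. Two of the three multiplications per iteration are squares.

```python
def escape_time(cx: float, cy: float, max_iter: int = 100) -> int:
    """Count iterations of z <- z^2 + c before |z| exceeds 2 (capped at max_iter)."""
    x = y = 0.0
    for k in range(max_iter):
        xx = x * x  # square of the real part
        yy = y * y  # square of the imaginary part
        if xx + yy > 4.0:  # |z|^2 > 4 means the point has escaped
            return k
        xy = x * y  # the only non-square product
        # (x + iy)^2 + c = (x^2 - y^2 + cx) + i(2xy + cy)
        x, y = xx - yy + cx, 2.0 * xy + cy
    return max_iter


if __name__ == "__main__":
    print(escape_time(0.0, 0.0))  # origin never escapes
    print(escape_time(2.0, 2.0))  # escapes after one step
```

Every pixel can run up to `max_iter` iterations, so a faster squarer pays off directly in rendering time.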

I Googled “squarers” and came across a paper by T.C. Chen, whose Boolean logic reduced the number of partial products by almost half. Then I came across another paper by Strandberg, who took advantage of the commonality between the partial products to reduce the calculation further. Looking at his results, I noticed even more commonality.
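
The general symmetry idea that lets a squarer use roughly half the partial products of a multiplier can be sketched as follows. When squaring, bit products a_i·a_j and a_j·a_i are identical, so each pair folds into one term shifted left by one bit, and a_i·a_i is just a_i (no AND gate needed). For an n-bit input that leaves n(n+1)/2 partial-product bits instead of n². This is a textbook illustration in Python with hypothetical function names, not a reconstruction of Chen's or Strandberg's exact logic, nor of the further optimization described here.

```python
def folded_partial_products(a: int, n: int) -> list[tuple[int, int]]:
    """Return (bit, weight_exponent) pairs for the folded squarer matrix of an n-bit a."""
    bits = [(a >> i) & 1 for i in range(n)]
    terms = []
    for i in range(n):
        # Diagonal term: a_i AND a_i == a_i, at weight 2^(2i).
        terms.append((bits[i], 2 * i))
        for j in range(i + 1, n):
            # a_i*a_j appears twice at weight 2^(i+j); fold both into one term at 2^(i+j+1).
            terms.append((bits[i] & bits[j], i + j + 1))
    return terms


def square_by_folding(a: int, n: int) -> int:
    """Sum the folded partial products; equals a*a for 0 <= a < 2**n."""
    return sum(bit << w for bit, w in folded_partial_products(a, n))


if __name__ == "__main__":
    n = 8
    print(len(folded_partial_products(0, n)), "partial-product bits vs", n * n)
    print(square_by_folding(200, n))
```

For n = 8 this gives 36 partial-product bits instead of 64, which is where the "almost half" comes from.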

“Hmmm…could I do even better?” I wondered.

I started applying some Boolean Algebra, and the partial products became ugly, like a long, wrinkly larva, unappealing to all. But after looking at it for some time, I noticed even more commonality in this ugly beast and saw the potential to combine two of the partial product terms. I applied more Boolean Algebra, and the math simplified itself beautifully and fluttered away into an optimized squarer.

“Have I just discovered something new?” I asked myself over and over, my excitement growing.  So I went back to Googling. I looked and looked and couldn’t find anything that talked about the optimizations I just uncovered. However, I did come across a “Request for Papers” form for an upcoming Computational Arithmetic symposium.

“Hmm…could I write a research paper worthy of publishing?” I mused. I had certainly read enough of them. “I doubt it, but I’m going to give it a try.” All I really wanted was for knowledgeable people to tell me whether I had discovered something novel. The deadline was January 20th, so I didn’t have very long. I worked hard, putting in long days and working into the night, and barely finished before the deadline.

A few weeks later, I got a reply that my paper was not up to their publishing standards. Even though I was disappointed, I appreciated their feedback, so I sent them a note saying, “Thank you for looking at my paper.” In that note, I mentioned that I was a sophomore in high school. (Later, I found out they thought I was extremely arrogant for not listing my advising professor on the paper.)

The next day, I got a single-word reply, “WOW!”

A few days later, I was surprised to get an email from the organizer asking me if I would like to speak at the ARITH symposium in Kyoto, Japan. How exciting! I jumped at the chance. “Yes! Definitely!” I replied, and the plan was in motion. They sponsored my trip, and Martin Langhammer from Intel worked with me to polish my talk.

My Dad and I flew into Tokyo and tried to check into that night’s hotel. The front desk told us our reservation had been for the previous night. Oops! We hadn’t taken the International Date Line into account. The symposium started the next day! We rushed to the train station and caught the next bullet train to Kyoto. My dad contacted the hotel in Kyoto. The front desk was about to close, but one kind woman offered to stay late to give us our key and check us in. Once we got to Kyoto, we got lost and turned around trying to find our hotel’s street. Eventually we found it, but we were two hours late, and we were incredibly grateful to the patient woman at the front desk. I was exhausted from dragging my luggage through the streets of Kyoto and went straight to sleep.

The next day, while waiting for my turn to talk, I was SO nervous. My Dad said he almost pulled the plug, seeing my neurotic fidgeting and the extreme bouncing of my knee. “I thought you were going to explode,” he said later.

But I managed to get up there on my shaky legs, and then all my many hours of practice paid off. I delivered the talk almost perfectly, exactly as planned. Dad recorded it on his phone from the back of the room. You can hear two professors with French accents: “Did you hear that? Sixteen years old?” “What?” asked the other man. “She is sixteen years old!”

My talk was the last one before breaking for lunch. To my surprise, I got a lot of attention. I had a poster display, and many people came up asking challenging questions. Even though I was incredibly intimidated (actually, I was scared to death and very tongue-tied), I was extremely excited. These people were the architects of present-day computers! They were professors from all over the world. I felt like I had finally met my people. People who shared my passion for architecting the computer of tomorrow.

My life suddenly became very surreal.

Intel made it clear they wanted me. However, I would first need to get my Ph.D., which, realistically, may take ten years.

At the same time, many professors were talking to me about coming to their universities. Dr. Paolo Ienne was very persistent in asking me to come work at EPFL in Switzerland. (I didn’t think it would be possible, since I was only sixteen.) EPFL had summer research internships, usually reserved for upper-division students, and by then the summer session was almost over. Amazingly, he made it happen anyway. Because of my age, they arranged for my Dad and little brother to join me. They got us a beautiful apartment that overlooked Lake Léman and had a panoramic view of the Alps. It was only a five-minute walk to the beach, although I was too busy to enjoy it. While there, I audited a couple of classes (VLSI & Scala), and I assisted on a research project building a parallel Network-on-Chip (NoC) for transferring data from DDR memory to cache. We used the Chisel language to implement it on an FPGA.

After years of working basically alone, I’ve got to say,

I am SO happy to have found my people!

It is hard to find others in South Dakota who comprehend the extreme edge of technology that I get to play in now, like a kid in her sandbox. These days, I collaborate with my friends around the world, and I love every minute of it.

Persisting with my research, I have recently come up with my greatest discovery. I believe I have discovered:

A faster, smaller multiplier!

Yes! It is SO exciting! I can hardly wait to see what comes next.